Analysis of Single Assessment Framework Reports: Insights from Care 4 Quality

By Robyn Drury

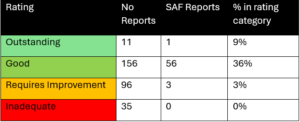

In the current climate with the Care Quality Commission (CQC) changing its inspection methodology to the new single assessment framework, Care 4 Quality have conducted an extensive analysis of 296 reports published on the CQC website over the past month. Within this treasure trove of data, we unearthed valuable insights that offer a deeper understanding of the nuances inherent in CQC assessments so far.

Key Findings:

Out of the 296 reports published, only 60 so far were under the new single assessment framework (SAF).

Adult social care (ASC) dominated these assessments, comprising a staggering 72% of the total.

Report Breakdown:

For any quality statements that are reviewed, the CQC is scoring as follows:

- 4 for each quality statement where the key question is rated as ‘Outstanding’.

- 3 for each quality statement where the key question is rated as ‘Good’.

- 2 for each quality statement where the key question is rated as ‘Requires Improvement’.

- 1 for each quality statement where the key question is rated as ‘Inadequate’.

For quality statements that have not been reviewed, the scores will be based on previous inspection reports. For instance, a previous ‘Good’ rating in Safe would correspond to a score of 3 for every Safe quality statement that was not checked.
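The carry-forward rule described above can be sketched in a few lines of Python. This is only an illustration of the mapping as set out in this article, not CQC's own tooling; the function name `carried_forward_scores` and the example statement names passed to it are our own assumptions.

```python
# Scores listed above for each key-question rating.
RATING_TO_SCORE = {
    "Outstanding": 4,
    "Good": 3,
    "Requires Improvement": 2,
    "Inadequate": 1,
}


def carried_forward_scores(previous_rating, unreviewed_statements):
    """Give every unreviewed quality statement the score implied by the
    previous key-question rating (hypothetical helper for illustration)."""
    score = RATING_TO_SCORE[previous_rating]
    return {statement: score for statement in unreviewed_statements}


# Example: a key question previously rated 'Good', two statements not reviewed.
safe_scores = carried_forward_scores(
    "Good", ["Safeguarding", "Safe and effective staffing"]
)
print(safe_scores)
```

In this sketch, any statement that is not reviewed simply inherits the old rating's score, which is why the age of the previous inspection matters so much to the final result.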

GP Services Evaluation: The Care Quality Commission recently published reports on 15 GP services, with a particular emphasis on one of the Responsive quality statements: Equity in access. The CQC has stated that it is currently conducting assessments of GPs to gain insights into how practices are addressing the increasing demand for access and to enhance its understanding of patient experiences. Access to general practice continues to be a key concern for the public. Out of the 15 services evaluated, 14 received a score of 3 (a ‘Good’ standard), indicating that they are making reasonable efforts to ensure access in the current environment.

Upon reviewing the adult social care reports pertaining to residential care services, nursing homes, and home care services, it was found that 19 out of the 43 reports (44%) were assessed against five key Quality Statements (QS), with a focus on the following areas:

- Safe – Safeguarding, Involving people to manage risk, and Safe and effective staffing.

- Caring – Independence, choice and control

- Responsive – Equity in experiences and outcomes

The remaining 56% of reports (24 of the 43) were evaluated against varying numbers of QS, ranging from 1 to all 33. On average, 7 were looked at, usually touching on at least 3 of the 5 quality statements above.

It is worth noting that the majority of the services previously held ‘Good’ ratings, and many have not undergone inspections since 2017 or 2018. This raises the question of whether the governance of these services, a critical component of quality assurance, has been adequately addressed in the absence of being one of the core Quality statements reviewed. This aspect is particularly vital for any service, especially those that have not been inspected in over six years.

The CQC have addressed this in their most recent blog:

“Key feedback we’ve heard has been around the number of quality statements that are being used to assess services, and whether this means assessments, and therefore scores, are being based on out-of-date evidence. We know how important it is for the public, providers and stakeholders that we share an up-to-date view of quality. We must also balance the need to undertake more assessments with ensuring we review as much new evidence as possible in each assessment.”

Variability in QS Review:

We conducted a further analysis of the differences in reports and their findings. It is worth noting that one report reviewed all 33 Quality Statements (QS) of a service previously rated ‘Inadequate’, resulting in an upgrade to a ‘Good’ rating.

Similarly, another report reviewed 24 QS of a service previously rated ‘Requires Improvement’, which also led to an improved rating.

On a positive note, the services that had regulatory breaches had these specific areas targeted for review, as anticipated. However, in some cases only 4 or 5 QS were reviewed. As a result, despite improvements in most areas, these services did not receive higher domain ratings or a more comprehensive review. Others were assessed in various domains, including Safe and Well-led, and received an improved rating there, but were still classified as ‘Requires Improvement’ in other domains because those domains were not reviewed.

In one case, a service that was previously rated as ‘Good’ had 18 QS reviewed, with 17 of them scored at 4. This service received an ‘Outstanding’ rating, which is commendable given the concerns in the sector about achieving high ratings with limited reviews. However, it raises questions about the rationale behind providing this service with a more extensive review compared to others, and whether this approach is equitable.

The inconsistency in reviews thus far is causing some confusion; however, the CQC has made efforts to clarify its approach for the future. In a recent blog post, they stated:

“When carrying out an assessment of a service that is either inadequate or ‘requires Improvement’ all quality statements under the key question that are rated ‘Inadequate’ or ‘Requires Improvement’ will be reviewed. It has always been true that a provider with many key questions rated as ‘Requires Improvement’ will require significantly more work to re-rate as ‘Good’ than one key question rated as ‘Requires Improvement’. That does not change in the new approach, though the amount of work per key question rating is reduced.”

This contrasts with the current findings in the early stages of assessment.

Looking at individual scores:

During our evaluation, we noted that in some areas, such as medication management and infection control, concerns were identified on-site. However, services sometimes received a score of 3 (‘Good’ standard) despite these issues. Although these concerns were noted through processes and observations, the overall score of 3 may have been influenced by positive feedback from service users, staff, and partners, which is an important factor in determining the final quality score. As the CQC is not yet publishing evidence category scores, this cannot be confirmed, although it has indicated that it may well publish them in the future.

The weighting of different evidence categories by the CQC is currently assumed to be equal, raising concerns about how various aspects of care are prioritised. It is important to consider whether factors like perceptions of medication safety from stakeholders should hold the same weight as actual process compliance during inspections.
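To illustrate why the weighting question matters, here is a small Python sketch. It assumes, purely for illustration, that a quality statement score is a weighted average of evidence-category scores; the category names, the example scores and the `weighted_score` function are hypothetical and are not taken from CQC's published method.

```python
def weighted_score(scores, weights=None):
    """Weighted mean of evidence-category scores (1 to 4).
    With no weights supplied, every category counts equally."""
    if weights is None:
        weights = {category: 1.0 for category in scores}
    total_weight = sum(weights[category] for category in scores)
    weighted_sum = sum(scores[category] * weights[category] for category in scores)
    return weighted_sum / total_weight


# Hypothetical scores: strong feedback, but a significant process concern.
example_scores = {
    "people's experience": 4,
    "staff feedback": 3,
    "partner feedback": 3,
    "observation": 3,
    "processes": 1,
}

# Equal weighting, as currently assumed.
equal = weighted_score(example_scores)

# Alternative: process compliance counts three times as much (an assumption).
heavier_process_weights = {category: 1.0 for category in example_scores}
heavier_process_weights["processes"] = 3.0
process_heavy = weighted_score(example_scores, heavier_process_weights)

print(round(equal, 2), round(process_heavy, 2))
```

With equal weights the average is 2.8, which sits closer to a 3. If process compliance carried three times the weight, the same evidence would average about 2.29, which sits closer to a 2. The numbers are invented, but they show how the choice of weighting alone could change a score.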

The criteria for identifying “shortfalls” and “significant shortfalls”, which determine a score of 2 or 1 respectively, remain unclear, creating inconsistencies in the reports we have reviewed. We acknowledge that these reports mainly reflect data from December and January, when the new evaluation process was first implemented, so the new methodology is not yet embedded across the CQC itself.

Inconsistency in Review Duration and Methodologies:

Variations were noted in the duration of reviews, with some lasting only a couple of days, while others extended beyond a month. The information provided on the CQC website regarding when a service was visited was inconsistent, as some overall summaries included this detail while others did not. There were instances where an onsite visit was conducted and the desktop review process then took over a month to finalise. Conversely, some services had an onsite visit followed by a brief desktop review spanning only a few days. Additionally, there were cases where no onsite visit was conducted at all, which is in line with what the CQC has advised about the shift towards more remote assessments. However, the reports currently fail to provide clear explanations for these decisions.

Conclusion

Overall, feedback from providers on the new inspection method has been mixed so far. Some have had an excellent experience, advising that the offsite work made the inspection process “more manageable, less stressful, and more aligned with the goal of truly assessing the quality of care provided.”

However, challenges remain in achieving consistency, fairness, and transparency in assessments. Despite these challenges, there is optimism for a more robust and effective evaluation framework through ongoing refinement and collaboration.

We anticipate future developments with interest and look forward to receiving more data on services previously rated as ‘Requires Improvement’ and ‘Inadequate’. It is our hope that these services will receive the necessary focus to evidence their improvement, as indicated by the CQC, which advises:

“We know as well that providers are keen to understand the planned frequency of assessments — when might they reasonably expect to have an assessment? We’re building that information at present, using the feedback and data we’re gathering during this period of transition”.

Demonstrating its commitment to dedicating substantial resources to evaluating services, the CQC announced in its update on April 9th that future assessments will be either “planned” or “responsive – based on concerns”. It stated that it will regularly review the SAF and associated timelines until June 2024, incorporating feedback from providers. Following this, it will develop risk-based plans, stating: “Once the new frequencies are decided, we will publish a more detailed schedule for planned assessments. This will include a date by when we will have updated ratings for all providers. This will signal the end to our transition period. We expect to publish this information at the start of July 2024.”

While we eagerly wait for more information and remain attentive to the ongoing visits, for those seeking support in navigating the single assessment framework and other aspects of CQC assessments, Care 4 Quality stands ready to provide guidance and expertise. Contact us today to learn more about how we can assist your organisation on its quality improvement journey.